How to annotate your Image Classification Dataset at minimum cost?

In this demo, we walk you through the key decisions a task designer faces when annotating an image classification dataset at minimum cost. You start from a given unlabeled dataset and a set of classes of interest, and you choose the expertise of the workers you hire. Should you add gold standard questions for annotators? Which aggregation method should you use? If a computer vision (henceforth, CV) model is used, how frequently should you update it?

Take a glance at your dataset

You have an unlabeled dataset of 21740 images, and you want to hire workers to label them.

After some data cleaning, you end up with 16 classes of interest plus one catch-all "Other" class (16+1 in total), and you are ready to start annotating.

Here are the classes you have:

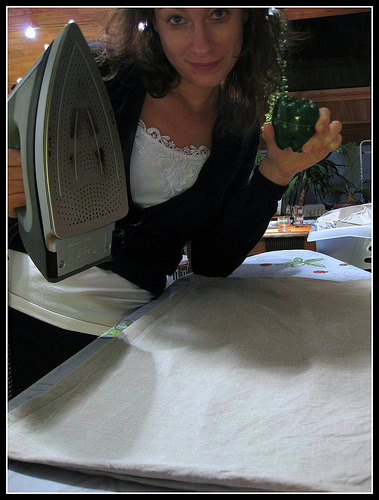

- Vacuum Cleaner

- Computer Keyboard

- Bottlecap

- Milk Can

- Iron

- Mortarboard

- Bonnet

- Sarong

- Modem

- Tub

- Purse

- Cocktail Shaker

- Rotisserie

- Jean, Denim

- Dutch Oven

- Football Helmet

- Other

To make the task more realistic, we add around 10% additional images from other classes as distractors.
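
As a rough illustration of this mixing step, the sketch below samples extra images from a pool of out-of-class images and shuffles them into the dataset. The function name `add_distractors`, the `fraction` parameter, and the choice to measure the 10% relative to the number of in-class images are assumptions made for this example, not details of the demo itself.

```python
import random


def add_distractors(in_class_images, distractor_pool, fraction=0.10, seed=0):
    """Mix roughly `fraction` extra out-of-class images into the dataset.

    Hypothetical helper: returns a shuffled list of (image, is_distractor) pairs.
    The fraction is taken relative to the number of in-class images (an assumption).
    """
    rng = random.Random(seed)
    # Never request more distractors than the pool can provide.
    n_extra = min(round(fraction * len(in_class_images)), len(distractor_pool))
    extras = rng.sample(distractor_pool, n_extra)
    mixed = [(img, False) for img in in_class_images]
    mixed += [(img, True) for img in extras]
    rng.shuffle(mixed)
    return mixed
```

Under these assumptions, a correct annotator would be expected to assign the distractor images to the "Other" class.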

Worker Configuration

How to Aggregate Labels
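
One common baseline for aggregation is majority voting, in which each image receives the label chosen by most of its annotators. The sketch below is a minimal version of that idea and is not necessarily one of the methods offered in this demo; the `majority_vote` name, the input layout, and the choice to return `None` on a tie are assumptions.

```python
from collections import Counter


def majority_vote(annotations):
    """Aggregate worker labels per image by simple majority.

    `annotations` maps an image id to the list of labels workers gave it.
    Returns a dict mapping each image id to the winning label, or to None
    when the image has no labels or the top two labels are tied.
    """
    aggregated = {}
    for image_id, labels in annotations.items():
        top = Counter(labels).most_common(2)
        if not top:
            aggregated[image_id] = None  # no annotations yet
        elif len(top) == 2 and top[0][1] == top[1][1]:
            aggregated[image_id] = None  # tie: could request one more annotation
        else:
            aggregated[image_id] = top[0][0]
    return aggregated
```

Returning `None` on a tie leaves the decision to the caller, who might, for example, request another annotation for that image at additional cost.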

Results

Performance (compared with ImageNet ground truth)
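
A comparison against ground truth can be sketched as a plain accuracy computation. The helper below is hypothetical: it assumes aggregated labels and ImageNet ground-truth labels are both dictionaries keyed by image id, and it reports coverage separately so that images without an aggregated label are not silently counted as wrong.

```python
def label_accuracy(aggregated, ground_truth):
    """Return (accuracy, coverage) of aggregated labels against ground truth.

    accuracy: fraction of labeled images whose label matches the ground truth.
    coverage: fraction of ground-truth images that received a label at all.
    """
    if not ground_truth:
        return 0.0, 0.0
    labeled = [img for img in ground_truth if aggregated.get(img) is not None]
    coverage = len(labeled) / len(ground_truth)
    if not labeled:
        return 0.0, coverage
    correct = sum(aggregated[img] == ground_truth[img] for img in labeled)
    return correct / len(labeled), coverage
```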

Your labels:

Green-bordered images were annotated fewer than 3 times. Red-bordered images were annotated more than 3 times.